This week it was Jamie’s turn to give a talk, on probabilistic programming, an alternative to the black-box magic of neural networks. He began by highlighting two pitfalls of traditional neural networks: the large amount of data required to train them and the lack of interpretability of their internals.

Probabilistic programming instead allows us to encode probability distributions in our program rather than single values. This means we can kick-start learning by building in our prior beliefs about a problem. The model is also more interpretable, since the posterior distribution tells us how uncertain the model is about its conclusions.

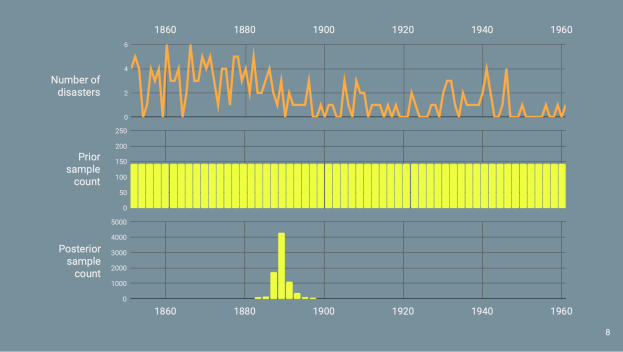

Jamie then provided the motivating example of coal mining disasters, where we can use probabilistic programming to infer the switchpoint year in which the average number of disasters dropped from a high level to a low level. He showed that even with a simple uniform prior (all years equally likely to be the switchpoint), the model still produced a posterior distribution concentrated around the correct switchpoint.
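
To make the switchpoint idea concrete, here is a minimal sketch in plain Python. It is not the code from the talk: instead of a probabilistic programming library and its sampler, it enumerates every candidate switchpoint and computes the posterior exactly. The counts are made up for illustration (not the real coal mining data), and the Gamma(1, 1) priors on the two disaster rates, along with the names `log_marginal` and `switchpoint_posterior`, are assumptions chosen to keep the maths simple.

```python
import math

# Made-up yearly disaster counts, for illustration only (not the real data set):
# ten "high" years followed by ten "low" years.
counts = [3, 4, 2, 5, 3, 4, 3, 2, 4, 3, 1, 0, 1, 0, 0, 1, 2, 0, 1, 0]


def log_marginal(segment, a=1.0, b=1.0):
    """Log marginal likelihood of Poisson counts with a Gamma(a, b) prior on the rate.

    The product of 1/y! terms is omitted: it is the same for every
    switchpoint, so it cancels when the posterior is normalised.
    """
    n, total = len(segment), sum(segment)
    return (a * math.log(b) - math.lgamma(a)
            + math.lgamma(a + total) - (a + total) * math.log(b + n))


def switchpoint_posterior(counts):
    """Posterior over s, where counts[:s] use the early rate and counts[s:] the late rate."""
    candidates = range(1, len(counts))  # uniform prior: every s is equally likely
    log_post = [log_marginal(counts[:s]) + log_marginal(counts[s:]) for s in candidates]
    peak = max(log_post)  # subtract the max before exponentiating, for numerical stability
    weights = [math.exp(lp - peak) for lp in log_post]
    norm = sum(weights)
    return {s: w / norm for s, w in zip(candidates, weights)}


posterior = switchpoint_posterior(counts)
best = max(posterior, key=posterior.get)
print(f"most probable switchpoint index: {best} (p = {posterior[best]:.2f})")
```

Because the prior over the switchpoint is uniform, the posterior is shaped entirely by how well each candidate split explains the counts. The spread of `posterior` across neighbouring indices is the model's uncertainty about where the change happened.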